sklearn.model_selection.permutation_test_score

- sklearn.model_selection.permutation_test_score(estimator, X, y, labels=None, cv=None, n_permutations=100, n_jobs=1, random_state=0, verbose=0, scoring=None)

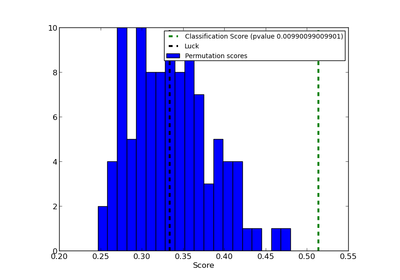

Evaluate the significance of a cross-validated score with permutations.

Read more in the User Guide.

Parameters: estimator : estimator object implementing ‘fit’

The object to use to fit the data.

X : array-like of shape at least 2D

The data to fit.

y : array-like

The target variable to try to predict in the case of supervised learning.

labels : array-like, with shape (n_samples,), optional

Group labels for the samples used while splitting the dataset into train/test set.

scoring : string, callable or None, optional, default: None

A string (see model evaluation documentation) or a scorer callable object / function with signature scorer(estimator, X, y).
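The two forms of scoring can be sketched as follows. This is a minimal illustration, not a recommended setup: the dataset is synthetic, and balanced_accuracy_scorer is a hypothetical helper written for this example that follows the scorer(estimator, X, y) signature described above.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import permutation_test_score


def balanced_accuracy_scorer(estimator, X, y):
    """Hypothetical scorer: mean per-class recall, so higher is better."""
    y = np.asarray(y)
    y_pred = estimator.predict(X)
    recalls = [np.mean(y_pred[y == c] == c) for c in np.unique(y)]
    return float(np.mean(recalls))


# Synthetic data, for illustration only.
X, y = make_classification(n_samples=120, n_features=8, n_informative=4,
                           random_state=0)
clf = LogisticRegression(max_iter=1000)

# scoring given as a string naming a built-in scorer.
score_named, _, _ = permutation_test_score(
    clf, X, y, scoring="accuracy", cv=3, n_permutations=20, random_state=0)

# scoring given as a callable with signature scorer(estimator, X, y).
score_custom, _, _ = permutation_test_score(
    clf, X, y, scoring=balanced_accuracy_scorer, cv=3, n_permutations=20,
    random_state=0)

print(score_named, score_custom)
```

A callable scorer should return a single number where larger means better, so that the returned pvalue keeps its usual interpretation.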

cv : int, cross-validation generator or an iterable, optional

Determines the cross-validation splitting strategy. Possible inputs for cv are:

- None, to use the default 3-fold cross validation,

- integer, to specify the number of folds in a (Stratified)KFold,

- An object to be used as a cross-validation generator.

- An iterable yielding train, test splits.

For integer/None inputs, StratifiedKFold is used for classification tasks, when y is binary or multiclass.

See the sklearn.model_selection module for the list of cross-validation strategies that can be used here.

Also refer to the cross-validation documentation.
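The accepted forms of cv might be used as in the sketch below. The data is synthetic and the estimator choice is arbitrary. With a fixed random_state on the shuffled KFold, passing the generator object or its materialised list of splits should give the same true score.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold, permutation_test_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, for illustration only.
X, y = make_classification(n_samples=90, n_features=6, random_state=0)
clf = DecisionTreeClassifier(max_depth=3, random_state=0)
common = dict(n_permutations=10, random_state=0)

# Integer: number of folds (stratified here, since y is binary).
score_int, _, _ = permutation_test_score(clf, X, y, cv=5, **common)

# Cross-validation generator object.
kfold = KFold(n_splits=5, shuffle=True, random_state=0)
score_gen, _, _ = permutation_test_score(clf, X, y, cv=kfold, **common)

# Iterable yielding (train, test) index arrays, materialised as a list.
splits = list(kfold.split(X))
score_iter, _, _ = permutation_test_score(clf, X, y, cv=splits, **common)

print(score_int, score_gen, score_iter)
```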

n_permutations : integer, optional

Number of times to permute y.

n_jobs : integer, optional

The number of CPUs to use to do the computation. -1 means ‘all CPUs’.

random_state : RandomState or an int seed (0 by default)

A random number generator instance to define the state of the random permutations generator.

verbose : integer, optional

The verbosity level.

Returns: score : float

The true score without permuting targets.

permutation_scores : array, shape (n_permutations,)

The scores obtained for each permutation.

pvalue : float

The returned value equals the p-value if scoring returns larger numbers for better scores (e.g., accuracy_score). If scoring is instead a loss function (that is, lower is better, as with mean_squared_error), the returned value is the complement of the p-value: 1 - p-value.
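How such a value can be derived from score and permutation_scores is sketched below in plain Python. It assumes the count-based estimate (C + 1) / (n_permutations + 1), where C is the number of permuted scores at least as large as the true score. The function name and the numbers are hypothetical and serve only to illustrate the arithmetic.

```python
def empirical_p_value(score, permutation_scores):
    """Share of permuted scores >= the true score, with one added to both
    the count and the total (assumed convention, see lead-in)."""
    n_at_least = sum(1 for s in permutation_scores if s >= score)
    return (n_at_least + 1.0) / (len(permutation_scores) + 1.0)


# Hypothetical scores, for illustration only (higher is better).
true_score = 0.90
permuted = [0.48, 0.52, 0.55, 0.91, 0.50, 0.47, 0.53, 0.49, 0.51]

p = empirical_p_value(true_score, permuted)
print(p)  # one of nine permuted scores reaches 0.90, so (1 + 1) / (9 + 1)
```

Under this convention the smallest attainable value is 1 / (n_permutations + 1), so n_permutations bounds how small a p-value can be reported.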

Notes

This function implements Test 1 in:

Ojala and Garriga. Permutation Tests for Studying Classifier Performance. The Journal of Machine Learning Research (2010) vol. 11
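A minimal end-to-end call might look like the following sketch. The dataset is synthetic and the estimator, fold count, and number of permutations are arbitrary choices made for illustration. It shows the three return values and their shapes.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import permutation_test_score

# Synthetic, well-separated two-class data (illustration only).
X, y = make_classification(n_samples=200, n_features=10, n_informative=5,
                           class_sep=2.0, random_state=0)
clf = LogisticRegression(max_iter=1000)

score, permutation_scores, pvalue = permutation_test_score(
    clf, X, y, cv=5, n_permutations=50, scoring="accuracy", random_state=0)

print("true score:", score)
print("mean permuted score:", permutation_scores.mean())
print("pvalue:", pvalue)
```

A true score well above the permuted scores, together with a small pvalue, suggests that the estimator has found a real dependency between X and y rather than fitting noise.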